Advanced Video With More Context And Configuration

The cornerstone of any advanced video telematics system is the event video: the evidence of record for accidents and other incidents of interest, capturing both what led up to the incident and what happened after. Event videos can be captured automatically, via triggers from g-sensors or the vehicle bus (hard braking, etc.). In more advanced systems, events can also be generated by Advanced Driver Assistance Systems (ADAS) or Driver Monitoring Systems (DMS) algorithms that detect tailgating, driver distraction, etc. Event videos can also be generated on demand, either by the fleet manager or the driver. For the fleet manager, it is critical to be able to extract segments of video from loop-recorded storage (DVR), for any asset or driver, by making a request from the fleet portal. For drivers, a ‘panic button’ feature that captures video snippets around incidents on the road at any time empowers them in a variety of scenarios where they are not at fault.
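To make the idea of an automatic trigger concrete, here is a minimal sketch of how a hard-braking trigger could turn g-sensor samples into clip windows that cover the lead-up and the aftermath. The threshold, window lengths, cooldown, and all names (`AccelSample`, `find_event_windows`) are illustrative assumptions, not values or APIs from our SDK.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AccelSample:
    t: float      # seconds since the start of the trip
    ax_g: float   # longitudinal acceleration in g (negative = braking)


def find_event_windows(
    samples: List[AccelSample],
    brake_threshold_g: float = -0.45,  # assumed threshold, for illustration only
    pre_s: float = 5.0,                # seconds of video kept before the trigger
    post_s: float = 5.0,               # seconds of video kept after the trigger
    cooldown_s: float = 10.0,          # ignore re-triggers within this window
) -> List[Tuple[float, float]]:
    """Return (start, end) clip windows around each hard-braking trigger."""
    windows: List[Tuple[float, float]] = []
    last_trigger = None
    for s in samples:
        if s.ax_g <= brake_threshold_g:
            # Only trigger again once the cooldown has elapsed, so one long
            # braking manoeuvre does not produce a burst of duplicate events.
            if last_trigger is None or s.t - last_trigger >= cooldown_s:
                windows.append((max(0.0, s.t - pre_s), s.t + post_s))
                last_trigger = s.t
    return windows
```

The cooldown is a design choice rather than a requirement: without it, every sample below the threshold during a single braking event would request its own clip.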

[video src="https://www.lightmetrics.co/wp-content/uploads/2021/05/VideoSensors-1.mp4" /]

While the video in itself provides immense value, augmenting it with more context around incidents increases the value fleets can derive from it. With that in mind, we are excited to announce a couple of enhancements to the way event videos are presented to end-users.

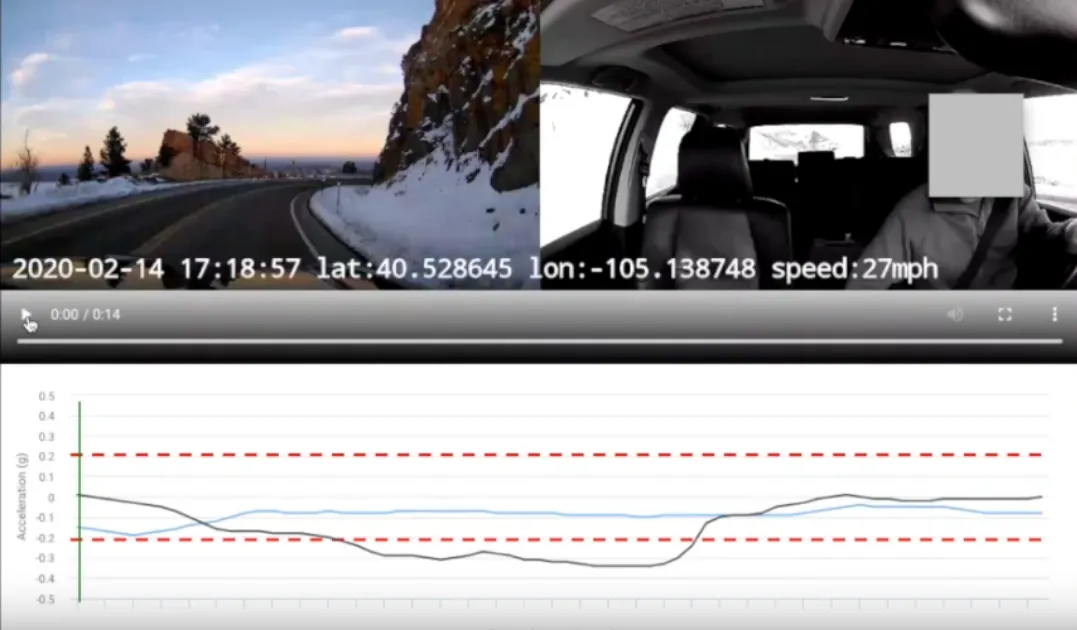

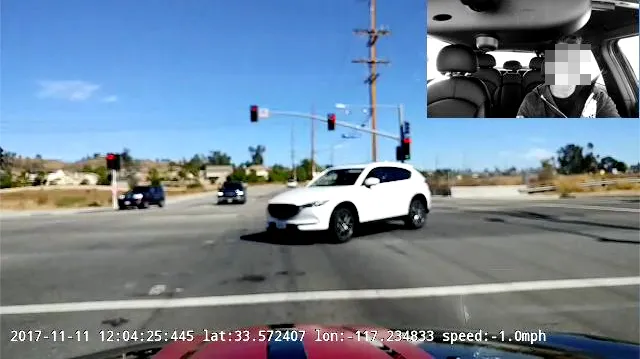

Bi-directional video

A key benefit of bi-directional video, and one that has been driving the adoption of dual-camera systems in particular and multi-camera systems in general, is that more views often add up to more context. An accident where the driver was distracted because he was looking at his phone is at the opposite end of the spectrum from one where someone suddenly cut him off while his eyes were still on the road. The financial implications are significant as well, with liability in the former instance being severe. Consequently, there is immense value in recognizing potentially dangerous driver behavior (texting, calling, smoking, etc.) from videos that give a comprehensive view of both the cab and the road, and in taking corrective action in advance.

Going hand in hand with the launch of our first integrated dual-camera solution, SmartCam, we now support event videos that combine road-facing and driver-facing views in both side-by-side mosaic and picture-in-picture formats, as shown above. This adds to the robust set of configurable parameters (video duration, quality, resolution, etc.) that TSPs already use to create solutions that provide the maximum value to fleets while staying within prescribed data upload limits.

Sensor profile overlays

In addition to video, our SDK captures a variety of other time-synchronized metadata around incidents, like 3-axis g-sensor values and speed. As shown in the video above, our platform now enables richer event views where video can be viewed in sync with g-sensor profiles and speed. For accidents and incidents like hard braking and cornering, this gives an immediate and intuitive feel for the severity of incidents. For us, this is the first step towards building a framework where video becomes the centerpiece of a rich and layered experience around events, one that includes a multitude of other data captured from the vehicle bus and other data sources.
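As a rough illustration of what "in sync" means for an overlay, the sketch below resamples a sensor track (speed, or one g-sensor axis) onto video frame times using linear interpolation. It assumes sensor timestamps are strictly increasing and share a clock with the video; the function names are hypothetical and this is not how our platform is necessarily implemented.

```python
from bisect import bisect_left
from typing import List, Sequence


def value_at(times: Sequence[float], values: Sequence[float], t: float) -> float:
    """Linearly interpolate a sensor track at time t (seconds into the clip).

    Assumes `times` is strictly increasing and the same length as `values`.
    Times outside the sampled range are clamped to the first/last value.
    """
    if not times:
        raise ValueError("sensor track is empty")
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # first index with times[i] >= t
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def overlay_track(
    times: Sequence[float], values: Sequence[float], fps: float, duration_s: float
) -> List[float]:
    """Return one interpolated sensor value per video frame."""
    n_frames = int(round(duration_s * fps))
    return [value_at(times, values, k / fps) for k in range(n_frames)]
```

Sensors typically sample at a different rate from the camera, so some form of resampling like this is needed before a speed or g-force curve can be drawn frame by frame alongside the video.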

While the experience around the video itself is central to video telematics, when the rubber hits the road (literally, in this case), the details of how and when the video is uploaded and accessed by fleets are important as well. The larger payload of video, compared to the conventional telematics data that fleets are used to, necessitates more thoughtful decisions around the creation and upload of video. We listened to our partner TSPs about the need to give fleets even more control over when and how videos are uploaded, and out of this came an extension to our existing DVR service: the Enhanced DVR.

Enhanced DVR (E-DVR)

Conventional DVR gives fleet managers the ability to request video from their admin portal, for any vehicle and any time segment, out of a looping video buffer. This gives them the assurance that even if automatic triggers miss an event of interest, they can always retrieve segments of video on demand. The duration for which video is accessible is, however, limited by the amount of onboard storage, with 7-15 days of availability before data is overwritten being typical.
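The link between onboard storage and retention is simple arithmetic, sketched below. The card size, bitrate, and daily recording hours in the usage note are made-up example inputs, not specifications of any particular device, and `retention_days` is a hypothetical helper.

```python
def retention_days(
    storage_gb: float,
    bitrate_mbps: float,
    recording_hours_per_day: float,
    channels: int = 1,
) -> float:
    """Estimate how many days of loop-recorded video fit in onboard storage.

    Uses decimal units (1 GB = 1e9 bytes, 1 Mbps = 1e6 bits per second) and
    assumes every channel records at the same constant bitrate.
    """
    bytes_per_second = bitrate_mbps * 1_000_000 / 8
    bytes_per_day = bytes_per_second * 3600 * recording_hours_per_day * channels
    return storage_gb * 1_000_000_000 / bytes_per_day
```

For example, under these assumptions a 128 GB card recording one channel at 2 Mbps for 10 hours a day holds about 14 days of video, and adding a second channel at the same bitrate halves that to about 7 days.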

With our E-DVR service, we now give fleets the ability to store event videos for longer durations, on the order of two weeks to a month. Automatically triggered event videos are stored separately from the main DVR buffer, for fleet admins to access at a later point in time. The major use case we had in mind was the ability to upload event videos in real time at lower resolutions (e.g., 360p or lower), and give fleet managers the ability to request higher-resolution (720p or higher) footage around specific incidents that they want to examine in more detail later. This could be particularly effective for fleets instituting coaching and review programs with drivers (where high-resolution video is essential), without compromising on data costs for everyday use. Paired with event severity metrics, this can be a powerful tool for optimizing data upload budgets.
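One way to picture pairing severity metrics with a data budget is a simple greedy selection: request high-resolution footage for the most severe events first, until the budget runs out. This is a sketch under assumed inputs (a per-event severity score and an estimated high-resolution clip size); the `Event` fields and `pick_hires_requests` are hypothetical and not part of the E-DVR API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    event_id: str
    severity: float        # assumed score, higher = more severe
    hires_size_mb: float   # estimated size of the high-resolution clip


def pick_hires_requests(events: List[Event], budget_mb: float) -> List[str]:
    """Greedily pick events for high-resolution upload, most severe first.

    An event is skipped if its clip does not fit in the remaining budget;
    smaller, less severe clips may still be picked afterwards.
    """
    chosen: List[str] = []
    remaining = budget_mb
    for ev in sorted(events, key=lambda e: e.severity, reverse=True):
        if ev.hires_size_mb <= remaining:
            chosen.append(ev.event_id)
            remaining -= ev.hires_size_mb
    return chosen
```

A greedy pass like this is not guaranteed to make the best possible use of the budget, but it is easy to reason about and keeps the most severe incidents at the front of the queue.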

We are excited to roll these features out, see them enhance your solutions, and deliver real value to end-users. As always, though, it is still Day 1.