RideView: Backend and API Services

LightMetrics’ advanced video telematics platform, RideView, combines an SDK running on an edge device with backend API services running in the cloud. An edge device can be a smartphone, a tablet, or a dash camera installed in a vehicle. The edge device runs an application that uses the RideView SDK/client APIs to generate event media and metadata for a trip. These are then uploaded to the backend and exposed to third parties through our API services.

Our backend and API services serve as the backbone of our entire offering, tying together all the different components and packaging them for consumption by end-users. Let us take a peek under the hood into the constituent parts that make our backend tick.

Architecture and Usage

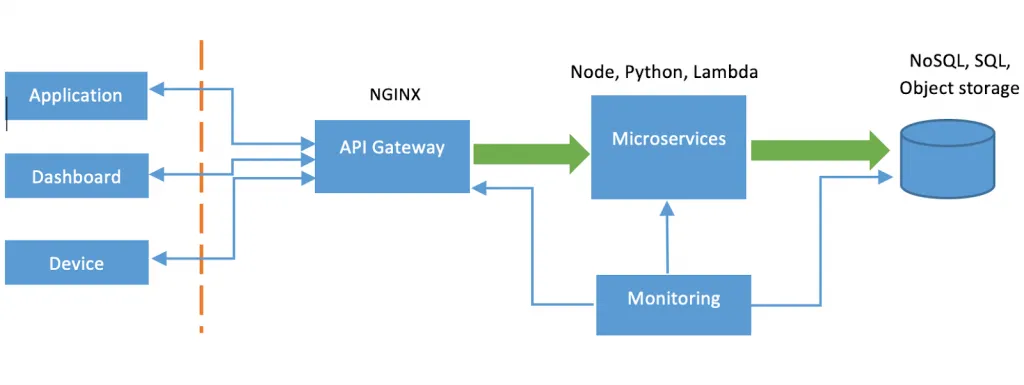

Figure 1 shows the key elements of the LightMetrics backend. Requests from various clients are received by a reverse proxy server, which also handles request caching and any additional filtering of requests. After authentication, requests are handled by our microservices. These microservices interact with cloud-hosted databases and storage services.

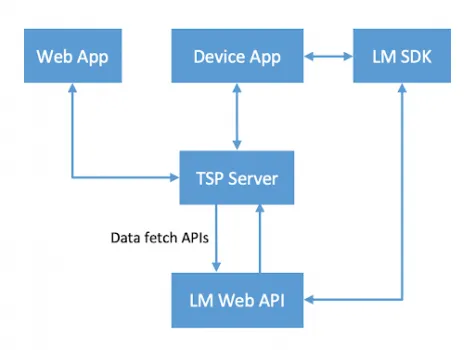

The recommended way of building applications using RideView is illustrated in Figure 2. A device application is built using the RideView device SDK. The SDK communicates with the LightMetrics API services; creating a trip and fetching asset configurations are examples of services invoked by the SDK. The appropriate data is created in the database and made available through web API endpoints. The LM web APIs are consumed, via the TSP (telematics service provider) server, by the user-facing web application. These APIs provide services to fetch trips, violations, aggregate statistics, trip details, and more.

The core tenets around which the backend is architected are:

- Information about a trip should be available immediately and updated frequently.

- Videos and other metadata around an event should be available when end-users want to review them.

- Diagnostics around connection status, camera mounting, and other points of failure should be readily available.

The backend has two classes of APIs: internal APIs, consumed by the SDK, and external APIs, which users can consume in their own applications (mobile or web). The internal API endpoints primarily support the creation and update of trip and event data. Two classes of data are produced here:

- Trip or event metadata such as location (latitude, longitude), speed, and time. This is written as a NoSQL document.

- Media data: an incident video clip or image, which is uploaded to S3 object storage.
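The split between the two classes of data can be sketched as follows. This is a minimal illustration, not the actual RideView schema: the `EventMetadata` fields, the `media_object_key` naming scheme, and the sample values are all assumptions made for the example. The idea is that the metadata document stays small and queryable, and only carries a pointer to the media object.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class EventMetadata:
    """Hypothetical shape of one event record; field names are illustrative."""
    trip_id: str
    event_type: str
    latitude: float
    longitude: float
    speed_kmph: float
    timestamp_utc: str
    media_key: str  # pointer to the clip in object storage, not the clip itself


def media_object_key(trip_id: str, event_index: int, extension: str = "mp4") -> str:
    """Derive an object-storage key for a media file (assumed naming scheme)."""
    return f"trips/{trip_id}/events/{event_index:04d}.{extension}"


def build_event_document(event: EventMetadata) -> str:
    """Serialize the metadata as a JSON document suitable for a NoSQL store."""
    return json.dumps(asdict(event), sort_keys=True)
```

A caller would first derive the media key, upload the clip under that key, and then write the metadata document that references it.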

The other set of APIs provides access to the data produced during the trip and is available for consumption in front-end web or mobile applications. They enable a rich set of features at the fleet, driver, trip, and asset level, which can be combined to create responsive and insightful fleet safety dashboards.

Implementation

The backend services are developed using NodeJS and Python, running as PaaS or elastic computing services on the AWS and IBM clouds. The LightMetrics backend relies on a combination of cloud services working in tandem, with resources residing across multiple cloud service providers. A reverse proxy server (NGINX) acts as an API gateway, while the core logic for the API endpoints is implemented as NodeJS microservices. Apart from the database and compute infrastructure, our backend uses several other cloud services, such as notification, messaging, and AWS Lambda, to enable various features. Some use cases require function-as-a-service to implement certain coordinated tasks, and we use Lambda functions for these. AWS Lambda is a popular serverless compute service that runs isolated functions on demand. A Lambda function executes and returns control to its caller, but some situations demand coordinated batch jobs, which are made possible by the AWS Step Functions service. A Step Functions workflow waits for one job to complete before moving on to the next.
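The difference between an isolated function call and a coordinated sequence of jobs can be illustrated with a small local emulation. This is only a sketch of the sequencing idea: real Step Functions workflows are defined as state machines in the Amazon States Language rather than in Python, and `run_workflow` and the step names used with it are hypothetical.

```python
from typing import Any, Callable, List, Sequence, Tuple

# A step is a (name, job) pair; the job takes the previous output as its input.
Step = Tuple[str, Callable[[Any], Any]]


def run_workflow(steps: Sequence[Step], initial_input: Any) -> Tuple[Any, List[str]]:
    """Run jobs strictly in order; each starts only after the previous one returns."""
    completed: List[str] = []
    state = initial_input
    for name, job in steps:
        state = job(state)      # blocks until this job finishes
        completed.append(name)  # record progress for later inspection
    return state, completed
```

If any job raises an exception, the loop stops and later jobs never run, which mirrors the "wait for completion before moving on" behavior described above.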

The underlying database service used to store trip data plays a central role in enabling our APIs. The primary data queried and aggregated is the trip metadata, which is stored as NoSQL documents on IBM Cloudant (based on Apache CouchDB). This database service has built-in MapReduce functionality that allows complex queries and aggregations on data without the user needing to worry about scalability and availability. Some of the APIs allow sorting and aggregation of data on different keys, and they internally use this built-in MapReduce feature. The media data is stored in S3 object storage and is accessible through API-assigned URLs with preset expiry periods. The event videos primarily consist of clips generated automatically by ADAS, DMS, and G-sensor processing on the edge. In addition, they include clips requested on demand from the backend (DVR). These can be requested once a trip completes, and a push notification is sent to the SDK to fulfill the request. Video upload, however, is subject to network availability, so the SDK has built-in retry logic for trip and video data, while the associated webhooks (described below) notify users when a request is fulfilled.
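The MapReduce pattern behind such aggregations can be sketched in memory. In Cloudant and CouchDB, views are normally defined as JavaScript functions inside design documents and executed by the database; the Python below only emulates the map, group, and reduce stages for illustration. The document fields (`driver_id`, `events`) and the function names are assumptions, not the actual trip schema.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable, Iterable, Iterator


def map_events_by_driver(doc: dict) -> Iterator[tuple[str, int]]:
    """Map step: emit (driver_id, number of events) for one trip document."""
    yield doc["driver_id"], len(doc.get("events", []))


def reduce_sum(values: Iterable[int]) -> int:
    """Reduce step: combine all values emitted under the same key."""
    return sum(values)


def run_view(
    docs: Iterable[dict],
    map_fn: Callable[[dict], Iterator[tuple[str, int]]],
    reduce_fn: Callable[[Iterable[int]], int],
) -> dict[str, int]:
    """Group mapped values by key, then reduce each group (keys sorted)."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for doc in docs:
        for key, value in map_fn(doc):
            grouped[key].append(value)
    return {key: reduce_fn(values) for key, values in sorted(grouped.items())}
```

Swapping in a different map function (for example, one keyed by asset or by event type) changes the aggregation without touching the grouping logic, which is what makes the pattern convenient for APIs that aggregate on different keys.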

Our API server currently processes more than 1 million requests per day, across multiple clients in different geographies. An extensive monitoring infrastructure forms the eyes and ears that ensure the backend is healthy and available at scale. We use the popular ELK (Elasticsearch + Logstash + Kibana) stack to monitor requests. Request patterns are monitored regularly, and alarms are raised when unusual patterns are detected. This is in addition to the default monitoring tools provided by the cloud service providers themselves.

Key Features

Webhooks

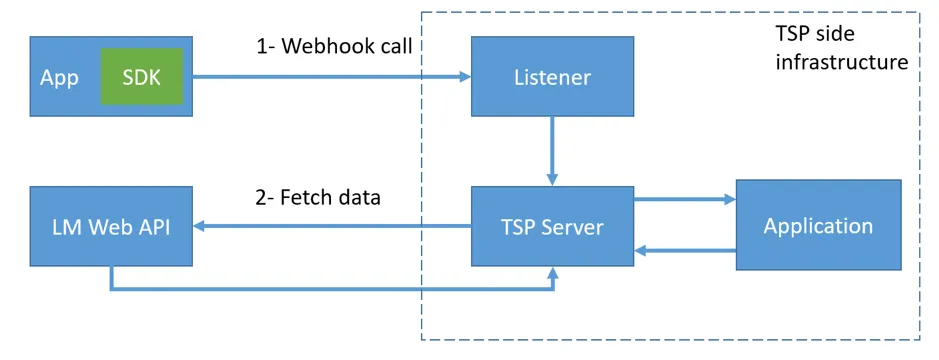

Webhooks are a great way to trigger custom workflows asynchronously: a user registers a custom endpoint that gets invoked when certain events occur. Figure 3 illustrates the high-level architecture of the webhook feature. In the current context, an event can be the completion of a trip or the availability of an uploaded event video. As an example, suppose a particular workflow needs to be triggered when a trip is completed. This workflow is implemented on the TSP’s server. The TSP registers the webhook (which is a POST REST API endpoint). On completion of the trip (when the ignition is turned off), the SDK invokes the registered webhooks, which in turn execute custom code to trigger the workflow. Currently, we support webhooks for events related to trips, DVR, and diagnostics.
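A receiving endpoint on the TSP side might look like the sketch below, written with Python's standard `http.server` module. The payload shape, the `event` field, and the event names in `HANDLED_EVENTS` are assumptions for illustration; the actual webhook payload is not described here.

```python
from __future__ import annotations

import json
from http.server import BaseHTTPRequestHandler

# Hypothetical event names; the real names are not specified in this article.
HANDLED_EVENTS = {"trip_completed", "dvr_video_available"}


def process_webhook(body: bytes) -> tuple[int, dict]:
    """Validate a webhook payload and decide on the HTTP status and response."""
    try:
        payload = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "invalid JSON"}
    if not isinstance(payload, dict):
        return 400, {"error": "payload must be a JSON object"}
    event = payload.get("event")
    if event not in HANDLED_EVENTS:
        return 202, {"status": "ignored"}
    # A real handler would start the custom workflow here, ideally by
    # queueing it so that the HTTP response is not delayed.
    return 200, {"status": "accepted", "event": event}


class WebhookHandler(BaseHTTPRequestHandler):
    """Minimal POST endpoint that delegates to process_webhook."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        status, response = process_webhook(self.rfile.read(length))
        data = json.dumps(response).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)
```

To try it locally, the handler can be served with `HTTPServer(("127.0.0.1", 8080), WebhookHandler).serve_forever()` from the same module. Keeping the validation in a plain function makes it testable without opening a socket.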

Provisioning and Configurations

The first step in setting up our service is provisioning fleets and assets. We provide APIs to create fleets, upload linked assets, and tag them with the appropriate service level, vehicle duty type, and payment status (pilot/paid). Events generated by our SDK are extensively configurable through APIs that set event thresholds, driver notification controls, and event video parameters: duration, resolution, quality, and format.
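As a rough sketch of what validating event video parameters before sending them to a configuration API could involve, consider the following. The `EventVideoConfig` type, its field names, the `WIDTHxHEIGHT` resolution convention, and the 1 to 10 quality scale are all assumptions made for this example and are not taken from the RideView API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EventVideoConfig:
    """Hypothetical event video settings for an asset."""
    duration_seconds: int
    resolution: str    # assumed "WIDTHxHEIGHT" form, e.g. "1280x720"
    quality: int       # assumed scale from 1 (lowest) to 10 (highest)
    video_format: str  # e.g. "mp4"


def validate_video_config(config: EventVideoConfig) -> List[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    errors: List[str] = []
    if config.duration_seconds <= 0:
        errors.append("duration_seconds must be positive")
    width, separator, height = config.resolution.partition("x")
    if not (separator and width.isdigit() and height.isdigit()):
        errors.append("resolution must look like WIDTHxHEIGHT")
    if not 1 <= config.quality <= 10:
        errors.append("quality must be between 1 and 10")
    if not config.video_format:
        errors.append("video_format must not be empty")
    return errors
```

Collecting all problems at once, rather than failing on the first, lets a dashboard show every invalid field in a single round trip.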

DVR, Time-lapse DVR, eDVR

The basic functionality that every video telematics system needs to support is the remote request of video clips from devices, also known as DVR. This allows a fleet admin to request a video clip around a particular location or time window within a trip, to review content of interest such as an accident or a driver complaint. The RideView platform supports DVR and two of its variants: Time-lapse DVR and eDVR. eDVR enables the remote request of higher-quality versions of already captured event videos. Time-lapse DVR allows fast review of an entire trip by creating and uploading a time-lapse video of the whole trip. As with event videos, all DVR requests come with extensive configurability around video resolution, quality, and format.
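The "time window within a trip" constraint on a DVR request can be sketched as a small request builder. The `Trip` type, the `build_dvr_request` function, and the shape of the returned dictionary are hypothetical; the sketch only shows the window check, not the actual DVR API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Trip:
    """Hypothetical trip record with timezone-aware start and end times."""
    trip_id: str
    start: datetime
    end: datetime


def build_dvr_request(trip: Trip, clip_start: datetime, duration_seconds: int) -> dict:
    """Build a DVR request body, rejecting windows that fall outside the trip."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    clip_end = clip_start + timedelta(seconds=duration_seconds)
    if clip_start < trip.start or clip_end > trip.end:
        raise ValueError("requested window must lie within the trip")
    return {
        "trip_id": trip.trip_id,
        "start": clip_start.isoformat(),
        "end": clip_end.isoformat(),
    }
```

Rejecting an out-of-range window on the requesting side avoids sending a push notification to a device for footage that cannot exist.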

Crowdsourced intelligence

The RideView platform, deployed on thousands of vehicles, processes 15 million miles of video, GPS, and sensor data each month (and growing rapidly). Points of interest (POI) such as speed signs and stop signs are extracted from trip documents, aggregated, maintained in a separate SQL database, and processed to generate our own crowdsourced POI data store. This data is currently consumed through internal APIs in our SDK, helping augment the performance of our ADAS engines in situations where visual detection is challenging. Going forward, we are working on exposing our crowdsourced data as a standalone API service that third parties can consume even outside of the RideView platform.
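One simple way to aggregate POI sightings from many trips is to bucket them into a coarse latitude/longitude grid and keep only cells seen often enough. This is an illustrative technique chosen for the sketch, not a description of the actual RideView pipeline; the function name, the cell size, and the `min_sightings` threshold are assumptions.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Sighting = Tuple[str, float, float]  # (poi_type, latitude, longitude)


def aggregate_pois(
    sightings: Iterable[Sighting],
    cell_size_deg: float = 0.0005,
    min_sightings: int = 3,
) -> List[dict]:
    """Group sightings by type and grid cell; keep cells with enough evidence."""
    cells: Dict[Tuple[str, int, int], List[Tuple[float, float]]] = defaultdict(list)
    for poi_type, lat, lon in sightings:
        cell = (poi_type, round(lat / cell_size_deg), round(lon / cell_size_deg))
        cells[cell].append((lat, lon))

    pois = []
    for (poi_type, _, _), points in cells.items():
        if len(points) >= min_sightings:
            pois.append({
                "type": poi_type,
                "lat": mean(p[0] for p in points),  # centroid of the sightings
                "lon": mean(p[1] for p in points),
                "sightings": len(points),
            })
    return pois
```

Requiring several independent sightings filters out one-off false detections, at the cost of a delay before a newly installed sign appears in the data store. A fixed grid can also split sightings that straddle a cell boundary, which a production system would likely handle with proper spatial clustering.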

Our backend stack is continuously evolving, both to meet the needs of a growing global client base that relies on it for stable and scalable solutions and to accommodate new features that deliver more value to end-users. Incorporating the latest developments, popular tools, and best practices without sacrificing flexibility, stability, and scalability is a challenge, but one we are well-positioned to address.

To learn more about our APIs, write to us at info@lightmetrics.co.